By Megan Czasonis, Huili Song, and David Turkington

We show that LLMs can effectively extrapolate from disparate domains of knowledge to reason through economic relationships, and that this ability may offer advantages over narrower statistical models.

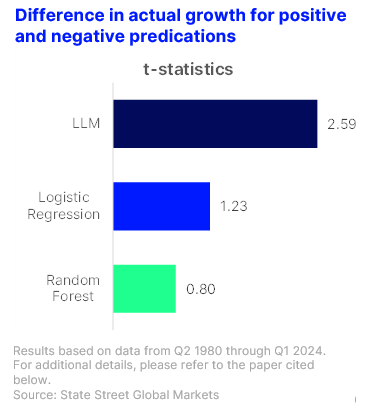

Fundamentally, large language models (LLMs) and numerical models both learn patterns in training data. However, while traditional models rely on narrowly curated datasets, LLMs can extrapolate patterns across disparate domains of knowledge. In new research, we explore whether this ability is valuable for predicting economic outcomes. First, we ask LLMs to infer economic growth from hypothetical conditions of other economic variables. We then use our Model Fingerprint framework to interpret how they use linear, nonlinear, and conditional logic to understand economic linkages. We find that their reasoning is intuitive and that it differs meaningfully from the reasoning of statistical models. We also compare the accuracy of the models’ reasoning using historical data and find that the LLMs infer growth outcomes more reliably than the statistical models. These results suggest that LLMs can effectively reason through economic relationships and that cross-domain extrapolation may add value beyond explicit statistical analysis.