By Megan Czasonis, Yin Li, Huili Song, and David Turkington

Our new "interrogation" method detects unreliable machine learning predictions in advance, overcoming key limitations of traditional cross-validation.

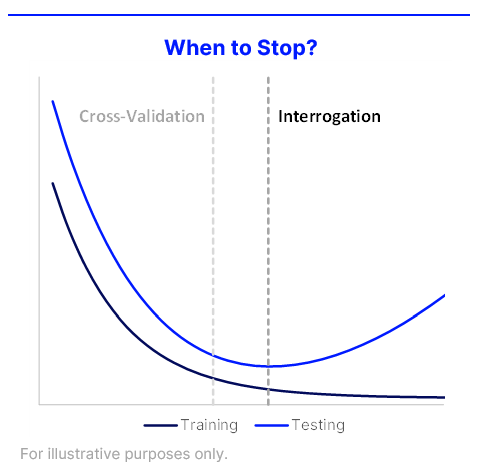

We introduce a new method called "interrogation" that warns when a machine learning model has underfit or overfit a data sample, offering a more efficient alternative to traditional cross-validation. Cross-validation can be cumbersome and computationally expensive because it holds out data and retrains the model repeatedly. Interrogation instead evaluates a model trained on all available data by decomposing its prediction logic into linear, nonlinear, pairwise interaction, and higher-order interaction components. The method successfully identified near-optimal stopping times for training neural networks without using validation samples, boosting confidence that a model is well calibrated and will perform reliably on new data. Because interrogation is model-agnostic, it provides transparency and reliability even for black-box models.
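The summary does not give the formulas behind the decomposition. As a rough illustration only, the sketch below assumes a partial-dependence-based scheme: the linear component is the spread of a straight line fitted to a feature's partial dependence curve, the nonlinear component is the curve's deviation from that line, and the pairwise component is how far the joint partial dependence of two features departs from the sum of their individual effects. The toy `model` and the helper names `partial_dependence`, `linear_nonlinear_effects`, and `pairwise_effect` are hypothetical, the authors' exact definitions may differ, and the higher-order component is omitted for brevity.

```python
import numpy as np

def model(X):
    # Hypothetical "fitted" model: linear in x0, quadratic in x1, plus an x0*x1 interaction
    return 2.0 * X[:, 0] + X[:, 1] ** 2 + X[:, 0] * X[:, 1]

def partial_dependence(f, X, k, values):
    """Average prediction over the sample when feature k is fixed at each value."""
    out = np.empty(len(values))
    for i, v in enumerate(values):
        Xv = X.copy()
        Xv[:, k] = v
        out[i] = f(Xv).mean()
    return out

def linear_nonlinear_effects(f, X, k):
    """Assumed definitions: spread of the best-fit line vs. curvature around it."""
    xk = X[:, k]
    pd = partial_dependence(f, X, k, xk)        # PD evaluated at each observed x_k
    slope, intercept = np.polyfit(xk, pd, 1)    # least-squares line through the PD curve
    line = slope * xk + intercept
    linear = np.mean(np.abs(line - pd.mean()))  # variation explained by the straight line
    nonlinear = np.mean(np.abs(pd - line))      # variation the line misses
    return linear, nonlinear

def pairwise_effect(f, X, k, l):
    """Mean absolute gap between the joint PD of (k, l) and the sum of their individual PDs."""
    n = len(X)
    pd_k = partial_dependence(f, X, k, X[:, k])
    pd_l = partial_dependence(f, X, l, X[:, l])
    base = f(X).mean()  # adding the mean prediction makes the gap exactly 0 for additive models
    total = 0.0
    for i in range(n):
        for j in range(n):
            Xv = X.copy()
            Xv[:, k] = X[i, k]
            Xv[:, l] = X[j, l]
            joint = f(Xv).mean()
            total += abs(joint - pd_k[i] - pd_l[j] + base)
    return total / n**2

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(60, 2))
    for k in range(2):
        print(f"x{k}: linear/nonlinear =", linear_nonlinear_effects(model, X, k))
    print("x0-x1 pairwise =", pairwise_effect(model, X, 0, 1))
```

For this toy model, x0 should show a linear effect with essentially no nonlinear part, x1 should show a sizable nonlinear part, and the x0*x1 term should produce a nonzero pairwise effect. The nested loop is O(n³) in model evaluations and is meant only for small illustrative samples.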