By Megan Czasonis, Mark Kritzman, Fangzhong Liu, and David Turkington

Relevance-Based Prediction can assess a prediction’s reliability from the consistency of the information that forms it, providing a novel perspective that complements conventional measures of confidence.

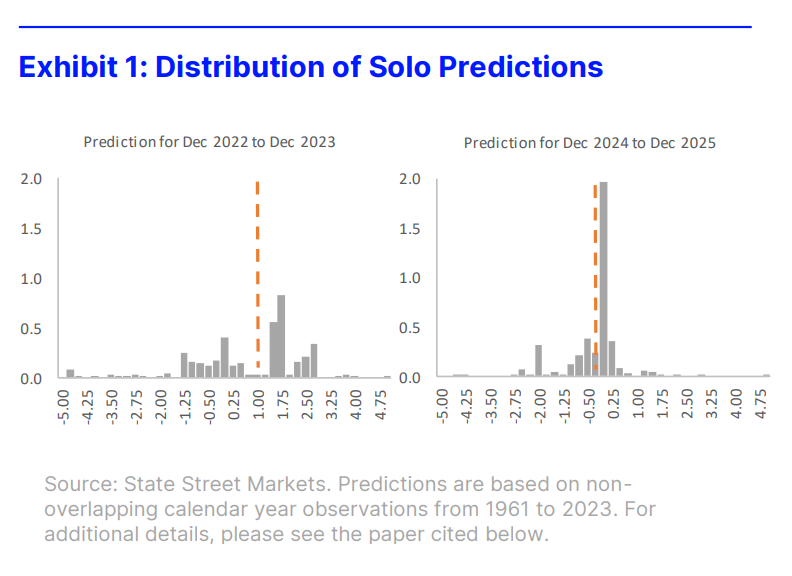

Prediction is like a voting process. Each datapoint casts a “vote” for the unknown outcome, and the final forecast averages these diverse views. But to know how confident we should be in the average, we need transparency into the votes that went into it. Linear regression and machine learning models can’t offer this visibility because they estimate parameters and then discard the data. However, as we show in a recent paper, the model-free technique known as Relevance-Based Prediction can assess the reliability of a prediction from the distribution of the information used to form it.
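To make the voting analogy concrete, here is a minimal sketch in Python. It is not the method from the paper: the function name `weighted_forecast`, the equal weights, and the two sets of votes are all hypothetical, chosen only to show that two forecasts can share the same average while resting on very different levels of agreement.

```python
from math import sqrt


def weighted_forecast(votes, weights):
    """Return (forecast, dispersion) for a set of weighted votes.

    Hypothetical helper for illustration only: the forecast is the
    weighted average of the votes, and the dispersion is the weighted
    standard deviation of the votes around that average.
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    forecast = sum(w * v for w, v in zip(weights, votes)) / total
    variance = sum(w * (v - forecast) ** 2 for w, v in zip(weights, votes)) / total
    return forecast, sqrt(variance)


# Two made-up sets of votes that average to the same value.
agreeing = [0.9, 1.0, 1.1, 1.0]      # votes cluster tightly
conflicting = [-2.0, 4.0, -1.0, 3.0]  # votes disagree sharply
equal_weights = [1.0, 1.0, 1.0, 1.0]  # assumption: every vote counts equally

f1, d1 = weighted_forecast(agreeing, equal_weights)
f2, d2 = weighted_forecast(conflicting, equal_weights)

print(f"agreeing votes:    forecast={f1:.2f}, dispersion={d1:.2f}")
print(f"conflicting votes: forecast={f2:.2f}, dispersion={d2:.2f}")
```

Both sets produce the same forecast, but the second does so from votes that disagree far more. A model that keeps only fitted parameters would report the average and lose this distinction, which is why access to the individual votes matters for judging reliability.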